When a member of the Constitutional Democratic Party, Kazuma Nakatani, recently asked Prime Minister Fumio Kishida questions generated by ChatGPT during a Diet session, it ignited a discussion that’s been mostly absent from Japan over the past several months.

Nakatani asked the chatbot, “If you were a lower house Diet member, what kind of questions would you ask the prime minister?”

ChatGPT posed, in relation to a recent bill amendment, “Are the opinions of local government and healthcare professionals being sufficiently reflected? And could you please tell me about the reactions of those involved in regard to the revised bill?”

The responses, generated by both Kishida and the AI, form my favorite part of the discussion:

Kishida: “This bill has been amended to sufficiently respond to the opinions and requests (of involved parties).”

ChatGPT: “(The proposed amendment bill) is working to sufficiently reflect the opinions of local government and healthcare professionals.”

In the first of this tenuously connected series of columns, I wrote that Kishida had “the charisma of an automated answering machine.” Little did I know how right that would prove to be. But just because the above answers are boring and similar doesn’t mean they’re unrevealing. Here we have a large language model giving us about as much insight into government machinations as a sitting prime minister. Is it any wonder Japan is so politically disenfranchised?

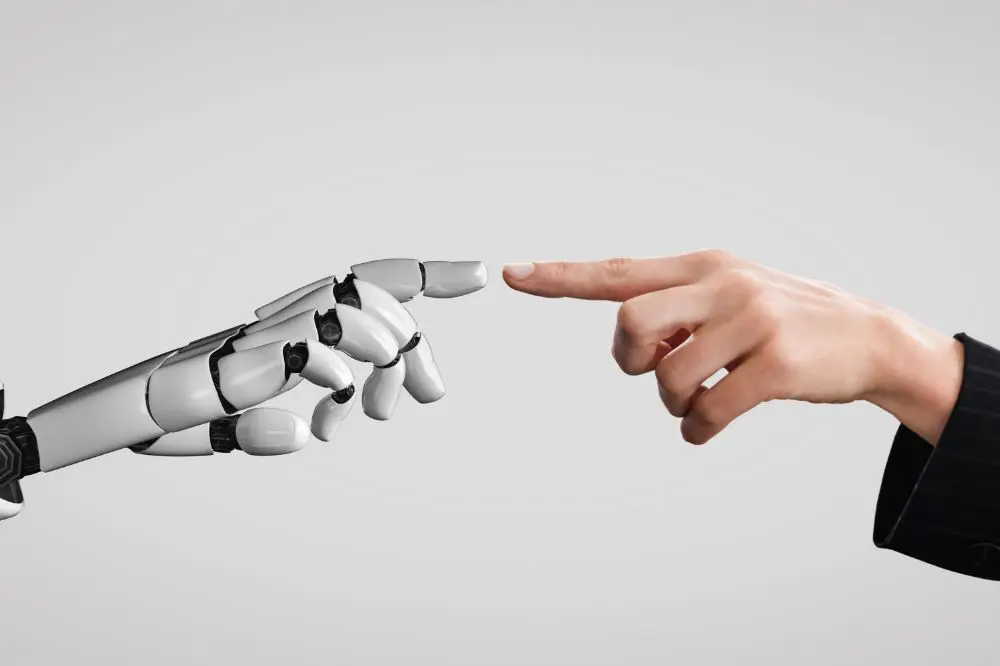

It is, however, important to discuss what ChatGPT is and isn’t. It’s not a thinking machine. It has no consciousness through which it filters thoughts, no facility for interpreting the world around it, nor the ability to extrapolate ideas based on subjective experience. Here, it’s concerned with emulating a Kishida-esque response by generating statistically likely sequences of words rather than drawing on any complex understanding of the semantic nature of language.

I’ll prove it to you. Here’s a little exchange I had with ChatGPT:

Me: “How do you generate answers to questions? Do you have a complex understanding of the semantic nature of language?”

ChatGPT: “As a language model, I generate answers to questions using a combination of statistical patterns learned from large amounts of text data and advanced natural language processing algorithms…. When presented with a question, I analyze the question to understand its meaning, identify relevant information, and then generate a response based on the patterns and relationships I have learned from my training data. I also use techniques such as language modeling and information retrieval to generate responses that are both relevant and grammatically correct. While I do not possess a human-like understanding of language, I am able to generate responses that are often quite sophisticated, and I can provide useful and informative answers to a wide variety of questions.”

It’s because of this probabilistic approach that ChatGPT is better at emulating the responses of someone like Kishida, as opposed to a wildly unpredictable public figure like Donald Trump. A ChatGPT response generated in the fashion of Donald Trump typically starts with, “Nobody knows more about [insert topic] than I do,” then trends towards inaccuracy.

The main point being: ChatGPT is often pretty good at giving the impression of being accurate, without ever knowing if it is. And sometimes, it just isn’t.

Like most writers, I became interested in ChatGPT the moment I first read about it. I wanted to assess its ability to construct prose — stolid, albeit improving — and to determine whether or not my career was in jeopardy.

I soon realized its possibilities lay elsewhere. Firstly, it’s fun to play with. Ask it to write an academic thesis on “Why Cured Meats are a Force for Good in the World” or “Why All People with the Name David are Destined for Greatness” and you’ll see what I mean.

Secondly, the Google search engine is a wheezing ember of its former self: it prioritizes click-bait publications thanks to the tyranny of SEO, makes incorrect algorithmic assumptions about the news sources I trust and buries information it does not want me to find. ChatGPT, in spite of its proclivity for falsehoods, offered a speedier and more targeted approach to research.

But in those halcyon days of last autumn, when ChatGPT was first released, it generated few tremors in Japan’s public consciousness. That is no longer the case.

Panasonic Connect recently appointed a ChatGPT project leader. The company has forbidden employees from disclosing confidential information when using it, while also insisting that humans must check all of the AI’s outputs. SoftBank and Hitachi have likewise restricted the use of ChatGPT in their respective business operations, while major banks, including MUFG, SMBC and Mizuho, have taken similar stances. LayerX, a Tokyo-based fintech startup, has gone one step further: requiring all new hires to be proficient at using the chatbot and spotting its inaccuracies.

This is only the beginning. Sam Altman, CEO of ChatGPT creator OpenAI, has announced that the company will soon open an office in Japan. It’s a move that makes practical sense. Altman claims Japan has more than 1 million daily users of the service, while the country contributed one of the biggest recent breakthroughs in the AI field: a study at Osaka University that used a deep learning model to generate images by decoding human brain activity.

Such advancements in AI have provided fertile ground for existential debate. The Future of Life Institute, which aims to mitigate the risks of innovative technologies, published an open letter in late March calling on “all AI labs to immediately pause for at least six months the training of AI systems more powerful than GPT-4,” the model behind ChatGPT’s latest iteration. The signatories include OpenAI co-founder Elon Musk and Apple co-founder Steve Wozniak, who hope to see the necessary safeguards in place before training continues.

Disconcerting as it may be, however, this is the world we now live in: one where every new AI breakthrough brings us a step closer to unleashing a genie that we can’t put back in the bottle.